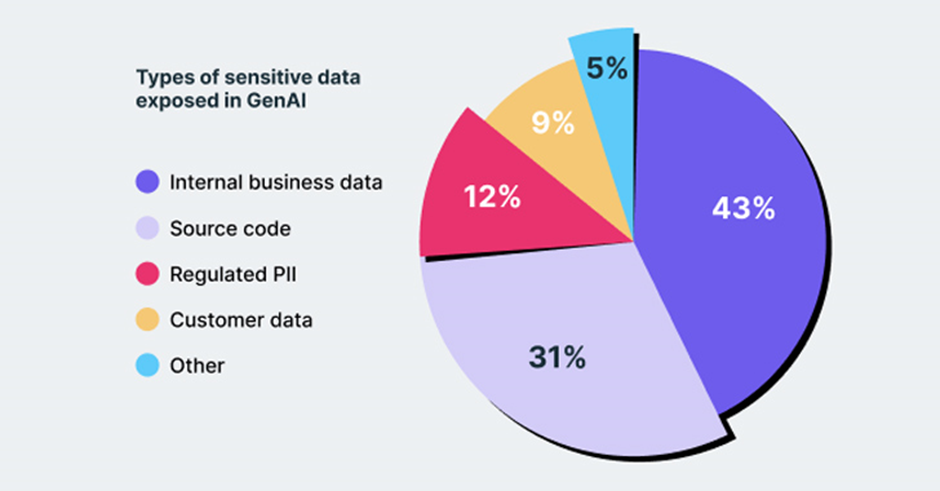

GenAI tools such as ChatGPT have introduced significant risks to organisations’ sensitive data. New research by browser security company LayerX highlights these risks and lays out how to prevent them. The report, titled “Revealing the True GenAI Data Exposure Risk”, gives data protection stakeholders the insight they need to take proactive measures.

By analysing how 10,000 employees use ChatGPT and other generative AI applications, the report identifies several areas of concern. Most alarming, 6% of employees have copied and pasted sensitive data into GenAI tools, with 4% doing so weekly. This recurring behaviour poses a severe threat to organisations because it increases the likelihood of data being stolen or leaked.

The report addresses several key risk-assessment questions: how widely GenAI is used across enterprise workforces, what proportion of that usage consists of “paste” actions, how many employees paste sensitive data into GenAI and how often, which departments use GenAI the most, and which types of sensitive data are most susceptible to exposure through copying and pasting.

One noteworthy finding is a 44% surge in GenAI usage within the past three months alone. Despite this growth, an average of only 19% of employees in organisations currently use GenAI tools. Even at this level of adoption, the risks associated with GenAI usage remain significant.

The research also emphasises how common data exposure is. Among employees who use GenAI, 15% have copied and pasted data into it, with 4% doing so weekly and 0.7% doing so multiple times a week. This recurring pattern highlights the urgent need for robust data protection measures to prevent sensitive information from leaking.

Data protection stakeholders can use the report's insights to develop robust GenAI data protection plans. It is crucial to assess how much visibility the organisation has into GenAI usage patterns and to confirm that existing products provide the insight and protection needed. If current solutions fall short, stakeholders can consider adopting a solution that provides continuous monitoring, risk analysis, and real-time governance for every event during browsing sessions. With such measures in place, organisations can better safeguard their data in the age of GenAI.

https://www.redpacketsecurity.com/new-research-of-employees-paste-sensitive-data-into-genai-tools-as-chatgpt/ – Published June 15th

https://thehackernews.com/2023/06/new-research-6-of-employees-paste.html – Published June 15th